---
title: 'dagster cli'
title_meta: 'dagster cli API Documentation - Build Better Data Pipelines | Python Reference Documentation for Dagster'
description: 'dagster cli Dagster API | Comprehensive Python API documentation for Dagster, the data orchestration platform. Learn how to build, test, and maintain data pipelines with our detailed guides and examples.'
last_update:
  date: '2025-12-10'
custom_edit_url: null
---

# Dagster CLI

## dagster asset

Commands for working with Dagster assets.

```shell

dagster asset [OPTIONS] COMMAND [ARGS]...

```

Commands:

list

List assets

materialize

Execute a run to materialize a selection of assets

Clears the asset partitions status cache, which is used by the webserver to load partition pages more quickly. The cache will be rebuilt the next time the partition pages are loaded, if caching is enabled.

Usage:

dagster asset wipe-cache --all

dagster asset wipe-cache \

dagster asset wipe-cache \

## dagster debug

Commands for debugging Dagster issues by dumping or loading artifacts from specific runs.

This can be used to send a file to someone, such as the Dagster team, who doesn’t have direct access to your instance, allowing them to view the events and details of a specific run.

Debug files can be viewed using the dagster-webserver-debug CLI.

Debug files can also be downloaded from the Dagster UI.

```shell

dagster debug [OPTIONS] COMMAND [ARGS]...

```

Commands:

export

Export the relevant artifacts for a job run from the current instance into a file.

import

Import the relevant artifacts from debug files into the current instance.

## dagster definitions validate

The dagster definitions validate command loads and validates your Dagster definitions using a Dagster instance.

This command indicates which code locations contain errors, and which ones can be successfully loaded.

Code locations containing errors are considered invalid; all others are considered valid.

When running, this command sets the environment variable DAGSTER_IS_DEFS_VALIDATION_CLI=1.

This environment variable can be used to control the behavior of your code in validation mode.

This command exits with code 1 when errors are found and with code 0 otherwise.

This command should be run in a Python environment where the dagster package is installed.
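As a sketch of how the `DAGSTER_IS_DEFS_VALIDATION_CLI` variable might be used, the snippet below avoids touching real infrastructure while definitions are only being validated. The helper names `in_validation_mode` and `warehouse_url`, the `WAREHOUSE_URL` variable, and both connection strings are hypothetical and only illustrate the pattern.

```python
import os


def in_validation_mode() -> bool:
    """Return True when the code is loaded by `dagster definitions validate`."""
    # The validate command sets this variable to "1" while it runs.
    return os.environ.get("DAGSTER_IS_DEFS_VALIDATION_CLI") == "1"


def warehouse_url() -> str:
    """Hypothetical helper: pick a connection target based on the mode."""
    if in_validation_mode():
        # Use a throwaway in-memory target during validation.
        return "sqlite:///:memory:"
    # WAREHOUSE_URL is an assumed, project-specific variable.
    return os.environ.get("WAREHOUSE_URL", "postgresql://localhost:5432/analytics")
```

A check like this is typically placed where resources are constructed, so that asset and job definitions themselves stay identical in both modes.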

```shell

dagster definitions validate [OPTIONS]

```
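Because the command reports failure through its exit code, it can gate a CI step. The following is a minimal sketch using only the standard library; the function name `definitions_are_valid` is an assumption, and the command is passed in as a parameter so the wrapper makes no claims about which options are used.

```python
import subprocess
from typing import Sequence


def definitions_are_valid(command: Sequence[str]) -> bool:
    """Run a validation command and report whether it exited with code 0."""
    # check=False: a non-zero exit code is an expected outcome here,
    # so it is inspected instead of being raised as an exception.
    result = subprocess.run(list(command), check=False)
    return result.returncode == 0
```

For example, `definitions_are_valid(["dagster", "definitions", "validate"])` would return `False` when at least one code location fails to load, assuming the `dagster` executable is on the `PATH`.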

Options:

-v, --verbose

Show verbose stack traces, including system frames in stack traces.

--load-with-grpc

Load the code locations using a gRPC server, instead of in-process.

--log-format \

Format of the logs for dagster services

Default: `'colored'`

Options: colored | json | rich

--log-level \

Set the log level for dagster services.

Default: `'info'`

Options: critical | error | warning | info | debug

--empty-workspace

Allow an empty workspace

-w, --workspace \

Path to workspace file. Argument can be provided multiple times.

-d, --working-directory \

Specify working directory to use when loading the repository or job

-f, --python-file \

Specify python file or files (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each file as a code location in the current python environment.

-m, --module-name \

Specify module or modules (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each module as a code location in the current python environment.

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

--package-name \

Specify Python package where repository or job function lives

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--grpc-port \

Port to use to connect to gRPC server

--grpc-socket \

Named socket to use to connect to gRPC server

--grpc-host \

Host to use to connect to gRPC server, defaults to localhost

--use-ssl

Use a secure channel when connecting to the gRPC server

Environment variables:

DAGSTER_WORKING_DIRECTORY

Provide a default for [`--working-directory`](#cmdoption-dagster-definitions-validate-d)

DAGSTER_PYTHON_FILE

Provide a default for [`--python-file`](#cmdoption-dagster-definitions-validate-f)

DAGSTER_MODULE_NAME

Provide a default for [`--module-name`](#cmdoption-dagster-definitions-validate-m)

DAGSTER_autoload_defs_module_name

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dagster-definitions-validate-autoload-defs-module-name)

DAGSTER_PACKAGE_NAME

Provide a default for [`--package-name`](#cmdoption-dagster-definitions-validate-package-name)

DAGSTER_ATTRIBUTE

Provide a default for [`--attribute`](#cmdoption-dagster-definitions-validate-a)

## dagster dev

Start a local deployment of Dagster, including dagster-webserver running on localhost and the dagster-daemon running in the background.

```shell

dagster dev [OPTIONS]

```

Options:

--code-server-log-level \

Set the log level for code servers spun up by dagster services.

Default: `'warning'`

Options: critical | error | warning | info | debug

--log-level \

Set the log level for dagster services.

Default: `'info'`

Options: critical | error | warning | info | debug

--log-format \

Format of the logs for dagster services

Default: `'colored'`

Options: colored | json | rich

-p, --port, --dagit-port \

Port to use for the Dagster webserver.

-h, --host, --dagit-host \

Host to use for the Dagster webserver.

--live-data-poll-rate \

Rate at which the dagster UI polls for updated asset data (in milliseconds)

Default: `'2000'`

--use-legacy-code-server-behavior

Use the legacy behavior of the daemon and webserver each starting up their own code server

-v, --verbose

Show verbose stack traces for errors in the code server.

--use-ssl

Use a secure channel when connecting to the gRPC server

--grpc-host \

Host to use to connect to gRPC server, defaults to localhost

--grpc-socket \

Named socket to use to connect to gRPC server

--grpc-port \

Port to use to connect to gRPC server

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--package-name \

Specify Python package where repository or job function lives

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module or modules (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each module as a code location in the current python environment.

-f, --python-file \

Specify python file or files (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

-w, --workspace \

Path to workspace file. Argument can be provided multiple times.

--empty-workspace

Allow an empty workspace

Environment variables:

DAGSTER_ATTRIBUTE

Provide a default for [`--attribute`](#cmdoption-dagster-dev-a)

DAGSTER_PACKAGE_NAME

Provide a default for [`--package-name`](#cmdoption-dagster-dev-package-name)

DAGSTER_autoload_defs_module_name

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dagster-dev-autoload-defs-module-name)

DAGSTER_MODULE_NAME

Provide a default for [`--module-name`](#cmdoption-dagster-dev-m)

DAGSTER_PYTHON_FILE

Provide a default for [`--python-file`](#cmdoption-dagster-dev-f)

DAGSTER_WORKING_DIRECTORY

Provide a default for [`--working-directory`](#cmdoption-dagster-dev-d)
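The `-m` and `-f` options of `dagster dev` can be repeated to load several code locations. As an illustration, this sketch assembles an argument list from Python; the helper name `dev_command` is hypothetical and not part of Dagster.

```python
from typing import List, Optional, Sequence


def dev_command(modules: Sequence[str], port: Optional[int] = None) -> List[str]:
    """Build an argv list for `dagster dev`, repeating -m once per module."""
    argv = ["dagster", "dev"]
    for module in modules:
        # Each module is loaded as its own code location.
        argv += ["-m", module]
    if port is not None:
        # Command-line arguments must be strings.
        argv += ["-p", str(port)]
    return argv
```

The resulting list can be handed to `subprocess.run` unchanged, which avoids shell quoting issues.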

## dagster instance

Commands for working with the current Dagster instance.

```shell

dagster instance [OPTIONS] COMMAND [ARGS]...

```

Commands:

concurrency

Commands for working with the instance-wide op concurrency.

info

List the information about the current instance.

migrate

Automatically migrate an out of date instance.

reindex

Rebuild index over historical runs for performance.

## dagster job

Commands for working with Dagster jobs.

```shell

dagster job [OPTIONS] COMMAND [ARGS]...

```

Commands:

backfill

Backfill a partitioned job.

This command targets a job. The job can be specified in a number of ways:

1. dagster job backfill -j \<\> (works if ./workspace.yaml exists)

2. dagster job backfill -j \<\> -w path/to/workspace.yaml

3. dagster job backfill -f /path/to/file.py -a define_some_job

4. dagster job backfill -m a_module.submodule -a define_some_job

5. dagster job backfill -f /path/to/file.py -a define_some_repo -j \<\>

6. dagster job backfill -m a_module.submodule -a define_some_repo -j \<\>

execute

Execute a job.

This command targets a job. The job can be specified in a number of ways:

1. dagster job execute -f /path/to/file.py -a define_some_job

2. dagster job execute -m a_module.submodule -a define_some_job

3. dagster job execute -f /path/to/file.py -a define_some_repo -j \<\>

4. dagster job execute -m a_module.submodule -a define_some_repo -j \<\>

launch

Launch a job using the run launcher configured on the Dagster instance.

This command targets a job. The job can be specified in a number of ways:

1. dagster job launch -j \<\> (works if ./workspace.yaml exists)

2. dagster job launch -j \<\> -w path/to/workspace.yaml

3. dagster job launch -f /path/to/file.py -a define_some_job

4. dagster job launch -m a_module.submodule -a define_some_job

5. dagster job launch -f /path/to/file.py -a define_some_repo -j \<\>

6. dagster job launch -m a_module.submodule -a define_some_repo -j \<\>

list

List the jobs in a repository. Can only use ONE of --workspace/-w, --python-file/-f, --module-name/-m, --grpc-port, --grpc-socket.

print

Print a job.

This command targets a job. The job can be specified in a number of ways:

1. dagster job print -j \<\> (works if ./workspace.yaml exists)

2. dagster job print -j \<\> -w path/to/workspace.yaml

3. dagster job print -f /path/to/file.py -a define_some_job

4. dagster job print -m a_module.submodule -a define_some_job

5. dagster job print -f /path/to/file.py -a define_some_repo -j \<\>

6. dagster job print -m a_module.submodule -a define_some_repo -j \<\>

scaffold_config

Scaffold the config for a job.

This command targets a job. The job can be specified in a number of ways:

1. dagster job scaffold_config -f /path/to/file.py -a define_some_job

2. dagster job scaffold_config -m a_module.submodule -a define_some_job

3. dagster job scaffold_config -f /path/to/file.py -a define_some_repo -j \<\>

4. dagster job scaffold_config -m a_module.submodule -a define_some_repo -j \<\>

## dagster run

Commands for working with Dagster job runs.

```shell

dagster run [OPTIONS] COMMAND [ARGS]...

```

Commands:

delete

Delete a run by id and its associated event logs. Warning: Cannot be undone.

list

List the runs in the current Dagster instance.

migrate-repository

Migrate the run history for a job from a historic repository to its current repository.

wipe

Eliminate all run history and event logs. Warning: Cannot be undone.

## dagster schedule

Commands for working with Dagster schedules.

```shell

dagster schedule [OPTIONS] COMMAND [ARGS]...

```

Commands:

debug

Debug information about the scheduler.

list

List all schedules that correspond to a repository.

logs

Get logs for a schedule.

preview

Preview changes that will be performed by dagster schedule up.

restart

Restart a running schedule.

start

Start an existing schedule.

stop

Stop an existing schedule.

wipe

Delete the schedule history and turn off all schedules.

## dagster sensor

Commands for working with Dagster sensors.

```shell

dagster sensor [OPTIONS] COMMAND [ARGS]...

```

Commands:

cursor

Set the cursor value for an existing sensor.

list

List all sensors that correspond to a repository.

preview

Preview an existing sensor execution.

start

Start an existing sensor.

stop

Stop an existing sensor.

## dagster project

Commands for bootstrapping new Dagster projects and code locations.

```shell

dagster project [OPTIONS] COMMAND [ARGS]...

```

Commands:

from-example

Download one of the official Dagster examples to the current directory. This CLI enables you to quickly bootstrap your project with an officially maintained example.

list-examples

List the examples that are available to bootstrap with.

scaffold

Create a folder structure with a single Dagster code location and other files such as pyproject.toml. This CLI enables you to quickly start building a new Dagster project with everything set up.

scaffold-code-location

(DEPRECATED; Use dagster project scaffold --excludes README.md instead) Create a folder structure with a single Dagster code location, in the current directory. This CLI helps you to scaffold a new Dagster code location within a folder structure that includes multiple Dagster code locations.

scaffold-repository

(DEPRECATED; Use dagster project scaffold --excludes README.md instead) Create a folder structure with a single Dagster repository, in the current directory. This CLI helps you to scaffold a new Dagster repository within a folder structure that includes multiple Dagster repositories.

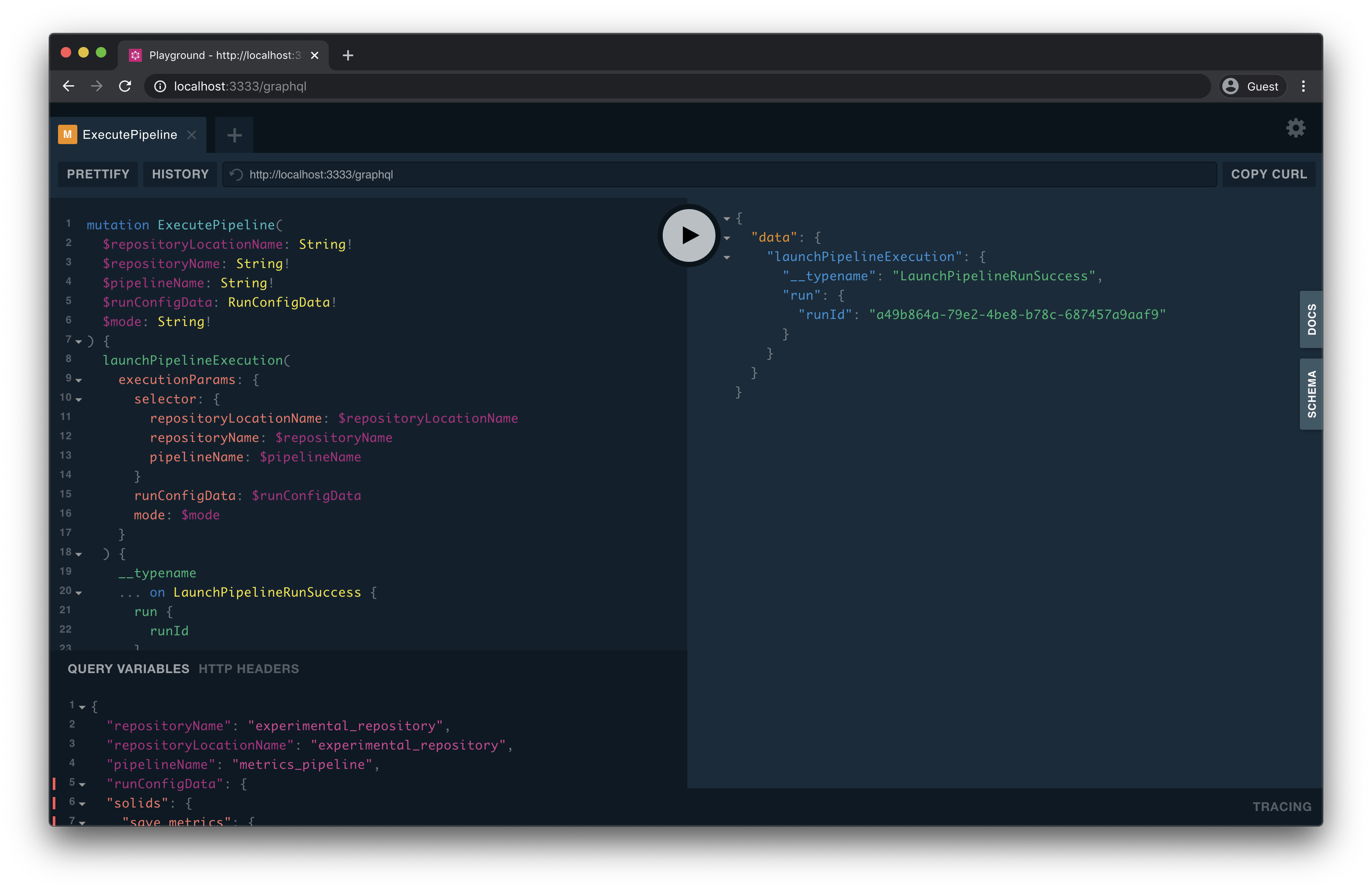

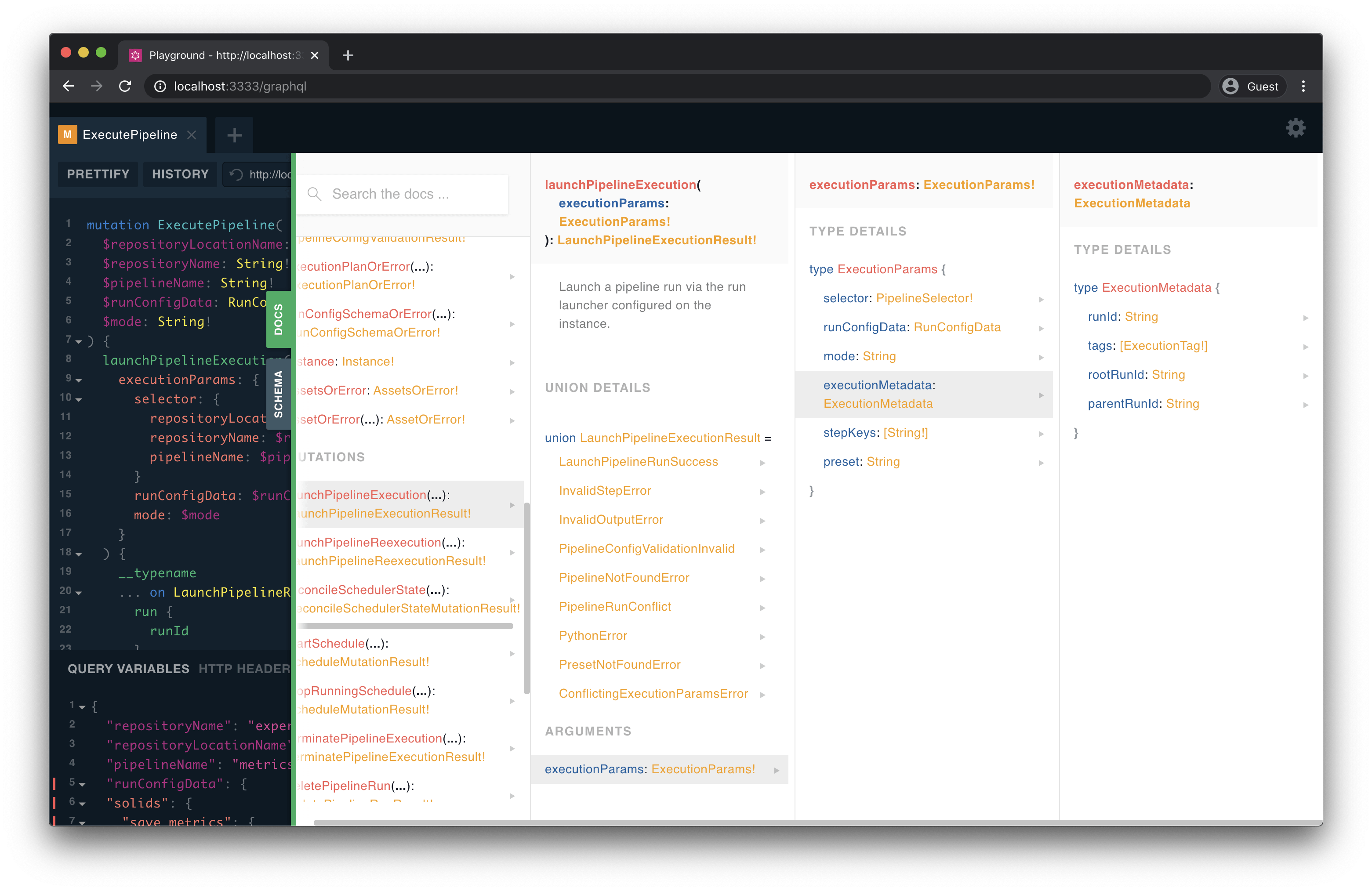

## dagster-graphql

Run a GraphQL query against the dagster interface to a specified repository or pipeline/job.

Can only use ONE of --workspace/-w, --python-file/-f, --module-name/-m, --grpc-port, --grpc-socket.

Examples:

1. dagster-graphql

2. dagster-graphql -w path/to/workspace.yaml

3. dagster-graphql -f path/to/file.py -a define_repo

4. dagster-graphql -m some_module -a define_repo

5. dagster-graphql -f path/to/file.py -a define_pipeline

6. dagster-graphql -m some_module -a define_pipeline

```shell

dagster-graphql [OPTIONS]

```

Options:

--version

Show the version and exit.

-t, --text \

GraphQL document to execute passed as a string

--file \

GraphQL document to execute passed as a file

-p, --predefined \

GraphQL document to execute, from a predefined set provided by dagster-graphql.

Options: launchPipelineExecution

-v, --variables \

A JSON encoded string containing the variables for GraphQL execution.

-r, --remote \

A URL for a remote instance running dagster-webserver to send the GraphQL request to.

-o, --output \

A file path to store the GraphQL response to. This flag is useful when making pipeline/job execution queries, since pipeline/job execution causes logs to print to stdout and stderr.

--ephemeral-instance

Use an ephemeral DagsterInstance instead of resolving via DAGSTER_HOME

--use-ssl

Use a secure channel when connecting to the gRPC server

--grpc-host \

Host to use to connect to gRPC server, defaults to localhost

--grpc-socket \

Named socket to use to connect to gRPC server

--grpc-port \

Port to use to connect to gRPC server

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--package-name \

Specify Python package where repository or job function lives

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module or modules (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each module as a code location in the current python environment.

-f, --python-file \

Specify python file or files (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

-w, --workspace \

Path to workspace file. Argument can be provided multiple times.

--empty-workspace

Allow an empty workspace

Environment variables:

DAGSTER_ATTRIBUTE

Provide a default for [`--attribute`](#cmdoption-dagster-graphql-a)

DAGSTER_PACKAGE_NAME

Provide a default for [`--package-name`](#cmdoption-dagster-graphql-package-name)

DAGSTER_autoload_defs_module_name

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dagster-graphql-autoload-defs-module-name)

DAGSTER_MODULE_NAME

Provide a default for [`--module-name`](#cmdoption-dagster-graphql-m)

DAGSTER_PYTHON_FILE

Provide a default for [`--python-file`](#cmdoption-dagster-graphql-f)

DAGSTER_WORKING_DIRECTORY

Provide a default for [`--working-directory`](#cmdoption-dagster-graphql-d)
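Since `--variables` for `dagster-graphql` expects a JSON encoded string, it is often easier to build it programmatically than to hand-escape it in a shell. A rough sketch, where the helper name `graphql_command` is hypothetical and the query text is left to the caller:

```python
import json
from typing import Any, Dict, List


def graphql_command(query: str, variables: Dict[str, Any], workspace: str) -> List[str]:
    """Build an argv list for dagster-graphql with JSON-encoded variables."""
    return [
        "dagster-graphql",
        "-w", workspace,              # only one target option may be used
        "-t", query,                  # GraphQL document passed as a string
        "-v", json.dumps(variables),  # variables serialized to a JSON string
    ]
```

Passing the list to `subprocess.run` keeps the JSON intact, because no shell is involved in interpreting quotes or braces.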

## dagster-webserver

Run dagster-webserver. Loads a code location.

Can only use ONE of --workspace/-w, --python-file/-f, --module-name/-m, --grpc-port, --grpc-socket.

Examples:

1. dagster-webserver (works if ./workspace.yaml exists)

2. dagster-webserver -w path/to/workspace.yaml

3. dagster-webserver -f path/to/file.py

4. dagster-webserver -f path/to/file.py -d path/to/working_directory

5. dagster-webserver -m some_module

6. dagster-webserver -f path/to/file.py -a define_repo

7. dagster-webserver -m some_module -a define_repo

8. dagster-webserver -p 3333

Arguments for these options can also be provided via environment variables prefixed with DAGSTER_WEBSERVER.

For example, DAGSTER_WEBSERVER_PORT=3333 dagster-webserver
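The environment-variable form is convenient when the webserver is launched from another process. A minimal sketch, relying only on the `DAGSTER_WEBSERVER_PORT` variable from the example; the helper name `webserver_env` is hypothetical.

```python
import os
from typing import Dict, Optional


def webserver_env(port: int, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with the webserver port set."""
    # Copy so that the caller's mapping (or os.environ) is not mutated.
    env = dict(os.environ if base is None else base)
    # Environment values are always strings, so the port is converted explicitly.
    env["DAGSTER_WEBSERVER_PORT"] = str(port)
    return env
```

The mapping can then be supplied as the `env` argument of `subprocess.run(["dagster-webserver"], env=webserver_env(3333))`.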

```shell

dagster-webserver [OPTIONS]

```

Options:

-h, --host \

Host to run server on

Default: `'127.0.0.1'`

-p, --port \

Port to run server on - defaults to 3000

-l, --path-prefix \

The path prefix where server will be hosted (eg: /dagster-webserver)

Default: `''`

--db-statement-timeout \

The timeout in milliseconds to set on database statements sent to the DagsterInstance. Not respected in all configurations.

Default: `15000`

--db-pool-recycle \

The maximum age of a connection to use from the sqlalchemy pool without connection recycling. Set to -1 to disable. Not respected in all configurations.

Default: `3600`

--db-pool-max-overflow \

The maximum overflow size of the sqlalchemy pool. Set to -1 to disable. Not respected in all configurations.

Default: `20`

--read-only

Start server in read-only mode, where all mutations such as launching runs and turning schedules on/off are turned off.

--suppress-warnings

Filter all warnings when hosting server.

--uvicorn-log-level, --log-level \

Set the log level for the uvicorn web server.

Default: `'warning'`

Options: critical | error | warning | info | debug | trace

--dagster-log-level \

Set the log level for dagster log events.

Default: `'info'`

Options: critical | error | warning | info | debug

--log-format \

Format of the log output from the webserver

Default: `'colored'`

Options: colored | json | rich

--code-server-log-level \

Set the log level for any code servers spun up by the webserver.

Default: `'info'`

Options: critical | error | warning | info | debug

--live-data-poll-rate \

Rate at which the dagster UI polls for updated asset data (in milliseconds)

Default: `2000`

--version

Show the version and exit.

--use-ssl

Use a secure channel when connecting to the gRPC server

--grpc-host \

Host to use to connect to gRPC server, defaults to localhost

--grpc-socket \

Named socket to use to connect to gRPC server

--grpc-port \

Port to use to connect to gRPC server

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--package-name \

Specify Python package where repository or job function lives

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module or modules (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each module as a code location in the current python environment.

-f, --python-file \

Specify python file or files (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

-w, --workspace \

Path to workspace file. Argument can be provided multiple times.

--empty-workspace

Allow an empty workspace

Environment variables:

DAGSTER_WEBSERVER_LOG_LEVEL

Provide a default for [`--dagster-log-level`](#cmdoption-dagster-webserver-dagster-log-level)

DAGSTER_ATTRIBUTE

Provide a default for [`--attribute`](#cmdoption-dagster-webserver-a)

DAGSTER_PACKAGE_NAME

Provide a default for [`--package-name`](#cmdoption-dagster-webserver-package-name)

DAGSTER_autoload_defs_module_name

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dagster-webserver-autoload-defs-module-name)

DAGSTER_MODULE_NAME

Provide a default for [`--module-name`](#cmdoption-dagster-webserver-m)

DAGSTER_PYTHON_FILE

Provide a default for [`--python-file`](#cmdoption-dagster-webserver-f)

DAGSTER_WORKING_DIRECTORY

Provide a default for [`--working-directory`](#cmdoption-dagster-webserver-d)

## dagster-daemon run

Run any daemons configured on the DagsterInstance.

```shell

dagster-daemon run [OPTIONS]

```

Options:

--code-server-log-level \

Set the log level for any code servers spun up by the daemon.

Default: `'warning'`

Options: critical | error | warning | info | debug

--log-level \

Set the log level for the daemon.

Default: `'info'`

Options: critical | error | warning | info | debug

--log-format \

Format of the log output from the daemon

Default: `'colored'`

Options: colored | json | rich

--use-ssl

Use a secure channel when connecting to the gRPC server

--grpc-host \

Host to use to connect to gRPC server, defaults to localhost

--grpc-socket \

Named socket to use to connect to gRPC server

--grpc-port \

Port to use to connect to gRPC server

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--package-name \

Specify Python package where repository or job function lives

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module or modules (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each module as a code location in the current python environment.

-f, --python-file \

Specify python file or files (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

-w, --workspace \

Path to workspace file. Argument can be provided multiple times.

--empty-workspace

Allow an empty workspace

Environment variables:

DAGSTER_DAEMON_LOG_LEVEL

Provide a default for [`--log-level`](#cmdoption-dagster-daemon-run-log-level)

DAGSTER_ATTRIBUTE

Provide a default for [`--attribute`](#cmdoption-dagster-daemon-run-a)

DAGSTER_PACKAGE_NAME

Provide a default for [`--package-name`](#cmdoption-dagster-daemon-run-package-name)

DAGSTER_autoload_defs_module_name

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dagster-daemon-run-autoload-defs-module-name)

DAGSTER_MODULE_NAME

Provide a default for [`--module-name`](#cmdoption-dagster-daemon-run-m)

DAGSTER_PYTHON_FILE

Provide a default for [`--python-file`](#cmdoption-dagster-daemon-run-f)

DAGSTER_WORKING_DIRECTORY

Provide a default for [`--working-directory`](#cmdoption-dagster-daemon-run-d)

## dagster-daemon wipe

Wipe all heartbeats from storage.

```shell

dagster-daemon wipe [OPTIONS]

```

## dagster api grpc

Serve the Dagster inter-process API over GRPC.

```shell

dagster api grpc [OPTIONS]

```

Options:

-p, --port \

Port over which to serve. You must pass one and only one of --port/-p or --socket/-s.

-s, --socket \

Serve over a UDS socket. You must pass one and only one of --port/-p or --socket/-s.

-h, --host \

Hostname at which to serve. Default is localhost.

-n, --max-workers, --max_workers \

Maximum number of (threaded) workers to use in the GRPC server

--heartbeat

If set, the GRPC server will shut itself down when it fails to receive a heartbeat after a timeout configurable with --heartbeat-timeout.

--heartbeat-timeout \

Timeout after which to shut down if --heartbeat is set and a heartbeat is not received

--lazy-load-user-code

Wait until the first LoadRepositories call to actually load the repositories, instead of waiting to load them when the server is launched. Useful for surfacing errors when the server is managed directly from the Dagster UI.

--use-python-environment-entry-point

If this flag is set, the server will signal to clients that they should launch dagster commands using \ -m dagster, instead of the default dagster entry point. This is useful when there are multiple Python environments running in the same machine, so a single dagster entry point is not enough to uniquely determine the environment.

--empty-working-directory

Indicates that the working directory should be empty and should not be set to the current directory as a default

--fixed-server-id \

[INTERNAL] This option should generally not be used by users. Internal param used by dagster to spawn a gRPC server with the specified server id.

--log-level \

Level at which to log output from the code server process

Default: `'info'`

Options: critical | error | warning | info | debug

--log-format \

Format of the log output from the code server process

Default: `'colored'`

Options: colored | json | rich

--container-image \

Container image to use to run code from this server.

--container-context \

Serialized JSON with configuration for any containers created to run the code from this server.

--inject-env-vars-from-instance

Whether to load env vars from the instance and inject them into the environment.

--location-name \

Name of the code location this server corresponds to.

--instance-ref \

[INTERNAL] Serialized InstanceRef to use for accessing the instance

--enable-metrics

[INTERNAL] Retrieves current utilization metrics from GRPC server.

--defs-state-info \

[INTERNAL] Serialized DefsStateInfo to use for the server.

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--package-name \

Specify Python package where repository or job function lives

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module where dagster definitions reside as top-level symbols/variables and load the module as a code location in the current python environment.

-f, --python-file \

Specify python file where dagster definitions reside as top-level symbols/variables and load the file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

Environment variables:

DAGSTER_GRPC_PORT

Provide a default for [`--port`](#cmdoption-dagster-api-grpc-p)

DAGSTER_GRPC_SOCKET

Provide a default for [`--socket`](#cmdoption-dagster-api-grpc-s)

DAGSTER_GRPC_HOST

Provide a default for [`--host`](#cmdoption-dagster-api-grpc-h)

DAGSTER_GRPC_MAX_WORKERS

Provide a default for [`--max-workers`](#cmdoption-dagster-api-grpc-n)

DAGSTER_LAZY_LOAD_USER_CODE

Provide a default for [`--lazy-load-user-code`](#cmdoption-dagster-api-grpc-lazy-load-user-code)

DAGSTER_USE_PYTHON_ENVIRONMENT_ENTRY_POINT

Provide a default for [`--use-python-environment-entry-point`](#cmdoption-dagster-api-grpc-use-python-environment-entry-point)

DAGSTER_EMPTY_WORKING_DIRECTORY

Provide a default for [`--empty-working-directory`](#cmdoption-dagster-api-grpc-empty-working-directory)

DAGSTER_CONTAINER_IMAGE

Provide a default for [`--container-image`](#cmdoption-dagster-api-grpc-container-image)

DAGSTER_CONTAINER_CONTEXT

Provide a default for [`--container-context`](#cmdoption-dagster-api-grpc-container-context)

DAGSTER_INJECT_ENV_VARS_FROM_INSTANCE

Provide a default for [`--inject-env-vars-from-instance`](#cmdoption-dagster-api-grpc-inject-env-vars-from-instance)

DAGSTER_LOCATION_NAME

Provide a default for [`--location-name`](#cmdoption-dagster-api-grpc-location-name)

DAGSTER_INSTANCE_REF

Provide a default for [`--instance-ref`](#cmdoption-dagster-api-grpc-instance-ref)

DAGSTER_ENABLE_SERVER_METRICS

Provide a default for [`--enable-metrics`](#cmdoption-dagster-api-grpc-enable-metrics)

DAGSTER_ATTRIBUTE

Provide a default for [`--attribute`](#cmdoption-dagster-api-grpc-a)

DAGSTER_PACKAGE_NAME

Provide a default for [`--package-name`](#cmdoption-dagster-api-grpc-package-name)

DAGSTER_autoload_defs_module_name

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dagster-api-grpc-autoload-defs-module-name)

DAGSTER_MODULE_NAME

Provide a default for [`--module-name`](#cmdoption-dagster-api-grpc-m)

DAGSTER_PYTHON_FILE

Provide a default for [`--python-file`](#cmdoption-dagster-api-grpc-f)

DAGSTER_WORKING_DIRECTORY

Provide a default for [`--working-directory`](#cmdoption-dagster-api-grpc-d)
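The rule that exactly one of `--port` or `--socket` must be passed to `dagster api grpc` can be checked before the server is launched. The sketch below is one way to do that; `grpc_command` is a hypothetical helper, not part of Dagster.

```python
from typing import List, Optional


def grpc_command(
    module_name: str,
    port: Optional[int] = None,
    socket: Optional[str] = None,
) -> List[str]:
    """Build an argv list for `dagster api grpc`, requiring port XOR socket."""
    # True when both are missing or both are given; either case is invalid.
    if (port is None) == (socket is None):
        raise ValueError("pass exactly one of port or socket")
    argv = ["dagster", "api", "grpc", "-m", module_name]
    if port is not None:
        argv += ["--port", str(port)]
    else:
        argv += ["--socket", str(socket)]
    return argv
```

Failing early in the caller gives a clearer error than waiting for the server process to reject its arguments.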


# create-dagster CLI

## Installation

See the [Installation](https://docs.dagster.io/getting-started/installation) guide.

## Commands

### create-dagster project

Scaffold a new Dagster project at PATH. The name of the project will be the final component of PATH.

This command can be run inside or outside of a workspace directory. If run inside a workspace, the project will be added to the workspace’s list of project specs.

“.” may be passed as PATH to create the new project inside the existing working directory.

Created projects will have the following structure:

```default
├── src
│   └── PROJECT_NAME
│       ├── __init__.py
│       ├── definitions.py
│       ├── defs
│       │   └── __init__.py
│       └── components
│           └── __init__.py
├── tests
│   └── __init__.py
└── pyproject.toml
```

The src.PROJECT_NAME.defs directory holds Python objects that can be targeted by the dg scaffold command or have dg-inspectable metadata. Custom component types in the project live in src.PROJECT_NAME.components. These types can be created with dg scaffold component.

Examples:

```default
create-dagster project PROJECT_NAME
    Scaffold a new project in new directory PROJECT_NAME. Automatically creates directory
    and parent directories.

create-dagster project .
    Scaffold a new project in the CWD. The project name is taken from the last component of the CWD.
```

```shell

create-dagster project [OPTIONS] PATH

```
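The rule that the project name is the final component of PATH, including the `.` case, can be sketched with `pathlib`. This mirrors the documented rule rather than the actual create-dagster implementation, and `project_name_for` is a hypothetical name.

```python
from pathlib import Path


def project_name_for(path: str) -> str:
    """Return the final component of PATH, resolving "." to the working directory."""
    # resolve() makes the path absolute, so "." becomes the current
    # directory and .name yields that directory's name.
    return Path(path).resolve().name
```

With this rule, `some/nested/my_project` yields `my_project`, and `.` yields the name of the directory the command is run from.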

Options:

--uv-sync, --no-uv-sync

Preemptively answer the “Run uv sync?” prompt presented after project initialization.

--use-editable-dagster

Install all Dagster package dependencies from a local Dagster clone. The location of the local Dagster clone will be read from the DAGSTER_GIT_REPO_DIR environment variable.

--verbose

Enable verbose output for debugging.

Arguments:

PATH

Required argument

### create-dagster workspace

Initialize a new Dagster workspace.

The scaffolded workspace folder has the following structure:

```default
├── projects
│   └── Dagster projects go here
├── deployments
│   └── local
│       ├── pyproject.toml
│       └── uv.lock
└── dg.toml
```

Examples:

```default
create-dagster workspace WORKSPACE_NAME
    Scaffold a new workspace in new directory WORKSPACE_NAME. Automatically creates directory
    and parent directories.

create-dagster workspace .
    Scaffold a new workspace in the CWD. The workspace name is the last component of the CWD.
```

```shell

create-dagster workspace [OPTIONS] PATH

```

Options:

--uv-sync, --no-uv-sync

Preemptively answer the “Run uv sync?” prompt presented after project initialization.

--use-editable-dagster

Install all Dagster package dependencies from a local Dagster clone. The location of the local Dagster clone will be read from the DAGSTER_GIT_REPO_DIR environment variable.

--verbose

Enable verbose output for debugging.

Arguments:

PATH

Required argument

---

---

title: 'dg api reference'

title_meta: 'dg api reference API Documentation - Build Better Data Pipelines | Python Reference Documentation for Dagster'

description: 'dg api reference Dagster API | Comprehensive Python API documentation for Dagster, the data orchestration platform. Learn how to build, test, and maintain data pipelines with our detailed guides and examples.'

last_update:

date: '2025-12-10'

custom_edit_url: null

---

# dg api reference

## dg api

Make REST-like API calls to Dagster Plus.

```shell

dg api [OPTIONS] COMMAND [ARGS]...

```

### agent

Manage agents in Dagster Plus.

```shell

dg api agent [OPTIONS] COMMAND [ARGS]...

```

#### get

Get detailed information about a specific agent.

```shell

dg api agent get [OPTIONS] AGENT_ID

```

Options:

--json

Output in JSON format for machine readability

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

AGENT_ID

Required argument

Environment variables:

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-agent-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-agent-get-api-token)
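As a rough illustration of how the two environment variables above can stand in for `--organization` and `--api-token`, the following Python sketch prepares an environment and an argument list for `dg api agent get`. The helper names and the placeholder values are hypothetical, and the command is only printed here, not executed.

```python
import os
import shlex


def dg_api_env(organization: str, api_token: str) -> dict[str, str]:
    """Copy the current environment and add the two documented defaults."""
    env = dict(os.environ)
    env["DAGSTER_CLOUD_ORGANIZATION"] = organization
    env["DAGSTER_CLOUD_API_TOKEN"] = api_token
    return env


def agent_get_argv(agent_id: str, as_json: bool = True) -> list[str]:
    """Build the argument list for `dg api agent get AGENT_ID`."""
    argv = ["dg", "api", "agent", "get", agent_id]
    if as_json:
        argv.append("--json")
    return argv


env = dg_api_env("my-org", "placeholder-token")  # hypothetical values
argv = agent_get_argv("AGENT_ID")
print(shlex.join(argv))
# To actually run it: subprocess.run(argv, env=env, check=True)
```

Keeping the token in the environment rather than on the command line avoids placing it in the argument list itself.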

#### list

List all agents in the organization.

```shell

dg api agent list [OPTIONS]

```

Options:

--json

Output in JSON format for machine readability

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Environment variables:

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-agent-list-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-agent-list-api-token)

### asset

Manage assets in Dagster Plus.

```shell

dg api asset [OPTIONS] COMMAND [ARGS]...

```

#### get

Get specific asset details.

```shell

dg api asset get [OPTIONS] ASSET_KEY

```

Options:

--view \

View type: ‘status’ for health and runtime information

Options: status

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

ASSET_KEY

Required argument

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-asset-get-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-asset-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-asset-get-api-token)

#### list

List assets with pagination.

```shell

dg api asset list [OPTIONS]

```

Options:

--limit \

Number of assets to return (default: 50, max: 1000)

--cursor \

Cursor for pagination

--view \

View type: ‘status’ for health and runtime information

Options: status

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-asset-list-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-asset-list-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-asset-list-api-token)
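The `--limit` and `--cursor` options above suggest a page-by-page loop. The Python sketch below only builds the argument list for one page and checks the documented maximum of 1000; the lower bound of 1 is an assumption, and the shape of the JSON response (including where the next cursor appears) is not described here, so it is left out.

```python
from typing import Optional

MAX_LIMIT = 1000  # documented maximum for --limit


def asset_list_argv(limit: int = 50, cursor: Optional[str] = None) -> list[str]:
    """Build the argument list for one page of `dg api asset list`."""
    # Lower bound of 1 is an assumption; only the maximum is documented.
    if not 1 <= limit <= MAX_LIMIT:
        raise ValueError(f"limit must be between 1 and {MAX_LIMIT}, got {limit}")
    argv = ["dg", "api", "asset", "list", "--limit", str(limit), "--json"]
    if cursor is not None:
        # The cursor value comes from a previous page of results.
        argv += ["--cursor", cursor]
    return argv


print(asset_list_argv(limit=100))
print(asset_list_argv(limit=100, cursor="CURSOR_FROM_PREVIOUS_PAGE"))
```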

### deployment

Manage deployments in Dagster Plus.

```shell

dg api deployment [OPTIONS] COMMAND [ARGS]...

```

#### get

Show detailed information about a specific deployment.

```shell

dg api deployment get [OPTIONS] NAME

```

Options:

--json

Output in JSON format for machine readability

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

NAME

Required argument

Environment variables:

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-deployment-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-deployment-get-api-token)

#### list

List all deployments in the organization.

```shell

dg api deployment list [OPTIONS]

```

Options:

--json

Output in JSON format for machine readability

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Environment variables:

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-deployment-list-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-deployment-list-api-token)

### log

Retrieve logs from Dagster Plus runs.

```shell

dg api log [OPTIONS] COMMAND [ARGS]...

```

#### get

Get logs for a specific run ID.

```shell

dg api log get [OPTIONS] RUN_ID

```

Options:

--level \

Filter by log level (DEBUG, INFO, WARNING, ERROR, CRITICAL)

--step \

Filter by step key (partial matching)

--limit \

Maximum number of log entries to return

--cursor \

Pagination cursor for retrieving more logs

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

RUN_ID

Required argument

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-log-get-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-log-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-log-get-api-token)
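To show how the filters above combine, here is a minimal Python sketch that assembles a `dg api log get` invocation. The helper name is hypothetical, and upper-casing the level before passing it is a choice made here to match the documented values, not something the CLI is known to require.

```python
from typing import Optional

# Levels listed in the --level help text.
LOG_LEVELS = ("DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL")


def log_get_argv(
    run_id: str,
    level: Optional[str] = None,
    step: Optional[str] = None,
    limit: Optional[int] = None,
) -> list[str]:
    """Build the argument list for `dg api log get RUN_ID` with optional filters."""
    argv = ["dg", "api", "log", "get", run_id]
    if level is not None:
        normalized = level.upper()
        if normalized not in LOG_LEVELS:
            raise ValueError(f"unknown log level: {level!r}")
        argv += ["--level", normalized]
    if step is not None:
        argv += ["--step", step]  # matched partially against step keys
    if limit is not None:
        argv += ["--limit", str(limit)]
    return argv


print(log_get_argv("RUN_ID", level="error", step="my_step", limit=20))
```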

### run

Manage runs in Dagster Plus.

```shell

dg api run [OPTIONS] COMMAND [ARGS]...

```

#### get

Get run metadata by ID.

```shell

dg api run get [OPTIONS] RUN_ID

```

Options:

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

RUN_ID

Required argument

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-run-get-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-run-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-run-get-api-token)

### run-events

Manage run events in Dagster Plus.

```shell

dg api run-events [OPTIONS] COMMAND [ARGS]...

```

#### get

Get run events with filtering options.

```shell

dg api run-events get [OPTIONS] RUN_ID

```

Options:

--type \

Filter by event type (comma-separated)

--step \

Filter by step key (partial matching)

--limit \

Maximum number of events to return

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

RUN_ID

Required argument

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-run-events-get-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-run-events-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-run-events-get-api-token)

### schedule

Manage schedules in Dagster Plus.

```shell

dg api schedule [OPTIONS] COMMAND [ARGS]...

```

#### get

Get specific schedule details.

```shell

dg api schedule get [OPTIONS] SCHEDULE_NAME

```

Options:

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

SCHEDULE_NAME

Required argument

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-schedule-get-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-schedule-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-schedule-get-api-token)

#### list

List schedules in the deployment.

```shell

dg api schedule list [OPTIONS]

```

Options:

--status \

Filter schedules by status

Options: RUNNING | STOPPED

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-schedule-list-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-schedule-list-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-schedule-list-api-token)

### secret

Manage secrets in Dagster Plus.

Secrets are environment variables that are encrypted and securely stored

in Dagster Plus. They can be scoped to different deployment levels and

code locations.

Security Note: Secret values are hidden by default. Use appropriate flags

and caution when displaying sensitive values.

```shell

dg api secret [OPTIONS] COMMAND [ARGS]...

```

#### get

Get details for a specific secret.

By default, the secret value is not shown for security reasons.

Use the --show-value flag to display the actual secret value.

WARNING: When using --show-value, the secret will be visible in your terminal

and may be stored in shell history. Use with caution.

```shell

dg api secret get [OPTIONS] SECRET_NAME

```

Options:

--location \

Filter by code location name

--show-value

Include secret value in output (use with caution - values will be visible in terminal)

--json

Output in JSON format for machine readability

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

SECRET_NAME

Required argument

Environment variables:

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-secret-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-secret-get-api-token)

#### list

List secrets in the organization.

By default, secret values are not shown for security reasons.

Use ‘dg api secret get NAME --show-value’ to view specific values.

```shell

dg api secret list [OPTIONS]

```

Options:

--location \

Filter secrets by code location name

--scope \

Filter secrets by scope

Options: deployment | organization

--json

Output in JSON format for machine readability

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Environment variables:

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-secret-list-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-secret-list-api-token)

### sensor

Manage sensors in Dagster Plus.

```shell

dg api sensor [OPTIONS] COMMAND [ARGS]...

```

#### get

Get specific sensor details.

```shell

dg api sensor get [OPTIONS] SENSOR_NAME

```

Options:

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Arguments:

SENSOR_NAME

Required argument

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-sensor-get-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-sensor-get-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-sensor-get-api-token)

#### list

List sensors in the deployment.

```shell

dg api sensor list [OPTIONS]

```

Options:

--status \

Filter sensors by status

Options: RUNNING | STOPPED | PAUSED

--json

Output in JSON format for machine readability

-d, --deployment \

Deployment to target.

-o, --organization \

Organization to target.

--api-token \

Dagster Cloud API token.

--view-graphql

Print GraphQL queries and responses to stderr for debugging.

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

>

Provide a default for [`--deployment`](#cmdoption-dg-api-sensor-list-d)

DAGSTER_CLOUD_ORGANIZATION

>

Provide a default for [`--organization`](#cmdoption-dg-api-sensor-list-o)

DAGSTER_CLOUD_API_TOKEN

>

Provide a default for [`--api-token`](#cmdoption-dg-api-sensor-list-api-token)

---

---

description: Configure dg from both configuration files and the command line.

title: dg cli configuration

---

`dg` can be configured from both configuration files and the command line.

There are three kinds of settings:

- Application-level settings configure the `dg` application as a whole. They can be set

in configuration files or on the command line, where they are listed as

"global options" in the `dg --help` text.

- Project-level settings configure a `dg` project. They can only be

set in the configuration file for a project.

- Workspace-level settings configure a `dg` workspace. They can only

be set in the configuration file for a workspace.

:::tip

The application-level settings used in any given invocation of `dg` are the

result of merging settings from one or more configuration files and the command

line. The order of precedence is:

```

user config file < project/workspace config file < command line

```

Note that project and workspace config files are combined above. This is

because, when projects are inside a workspace, application-level settings are

sourced from the workspace configuration file and disallowed in the constituent

project configuration files. In other words, application-level settings are

only allowed in project configuration files if the project is not inside a

workspace.

:::
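The precedence order in the tip above can be sketched in Python as a layered merge, where later layers override earlier ones. This is only an illustration: the setting names are hypothetical, and it assumes a flat key-by-key merge, which the text does not confirm.

```python
def merge_cli_settings(
    user: dict,
    project_or_workspace: dict,
    command_line: dict,
) -> dict:
    """Merge application-level settings, lowest precedence first."""
    merged: dict = {}
    # user config file < project/workspace config file < command line
    for layer in (user, project_or_workspace, command_line):
        merged.update(layer)
    return merged


settings = merge_cli_settings(
    user={"setting_a": "from-user", "setting_b": "from-user"},
    project_or_workspace={"setting_b": "from-workspace"},
    command_line={},  # only options explicitly passed on the command line
)
print(settings)
```

In this sketch `setting_b` ends up with the workspace value, while `setting_a` keeps the user-level value because no higher-precedence layer sets it.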

## Configuration files

There are three kinds of `dg` configuration files: user, project, and workspace.

- [User configuration files](#user-configuration-file) are optional and contain only application-level settings. They are located in a platform-specific location, `~/.config/dg.toml` (Unix) or `%APPDATA%/dg/dg.toml` (Windows).

- [Project configuration files](#project-configuration-file) are required to mark a directory as a `dg` project. They are located in the root of a `dg` project and contain project-specific settings. They may also contain application-level settings if the project is not inside a workspace.

- [Workspace configuration files](#workspace-configuration-file) are required to mark a directory as a `dg` workspace. They are located in the root of a `dg` workspace and contain workspace-specific settings. They may also contain application-level settings. When projects are inside a workspace, the application-level settings of the workspace apply to all contained projects as well.

When `dg` is launched, it will attempt to discover all three configuration files by looking up the directory hierarchy from the CWD (and in the dedicated location for user configuration files). Many commands require a project or workspace to be in scope. If the corresponding configuration file is not found when launching such a command, `dg` will raise an error.

### User configuration file

A user configuration file can be placed at `~/.config/dg.toml` (Unix) or

`%APPDATA%/dg/dg.toml` (Windows).

Below is an example of a user configuration file. The `cli` section contains

application-level settings and is the only permitted section. The settings

listed in the below sample are comprehensive:

### Project configuration file

A project configuration file is located in the root of a `dg` project. It may

either be a `pyproject.toml` file or a `dg.toml` file. If it is a

`pyproject.toml`, then all settings are nested under the `tool.dg` key. If it

is a `dg.toml` file, then settings should be placed at the top level. Usually

`pyproject.toml` is used for project configuration.

Below is an example of the dg-scoped part of a `pyproject.toml` (note all settings are part of `tool.dg.*` tables) for a project named `my-project`. The `tool.dg.project` section is a comprehensive list of supported settings:

### Workspace configuration file

A workspace configuration file is located in the root of a `dg` workspace. It

may either be a `pyproject.toml` file or a `dg.toml` file. If it is a `pyproject.toml`,

then all settings are nested under the `tool.dg` key. If it is a `dg.toml` file,

then all settings are top-level keys. Usually `dg.toml` is used for workspace

configuration.
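The nesting rule described above (settings under the `tool.dg` key in `pyproject.toml`, at the top level in `dg.toml`) can be sketched as follows. The function name and the `example_setting` key are hypothetical; the input is a TOML document that has already been parsed into a dict, for instance with the standard-library `tomllib` module on Python 3.11+.

```python
def dg_settings(filename: str, parsed: dict) -> dict:
    """Return the dg settings table from a parsed configuration file."""
    if filename == "pyproject.toml":
        # In pyproject.toml, all dg settings are nested under tool.dg.
        return parsed.get("tool", {}).get("dg", {})
    if filename == "dg.toml":
        # In dg.toml, settings are top-level keys.
        return parsed
    raise ValueError(f"not a dg configuration file: {filename}")


pyproject = {"tool": {"dg": {"example_setting": "value"}}}
dg_toml = {"example_setting": "value"}

# Both layouts yield the same settings table.
print(dg_settings("pyproject.toml", pyproject) == dg_settings("dg.toml", dg_toml))
```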

Below is an example of a `dg.toml` file for a workspace. The

`workspace` section is a comprehensive list of supported settings:

---

---

title: 'dg cli local build command reference'

title_meta: 'dg cli local build command reference API Documentation - Build Better Data Pipelines | Python Reference Documentation for Dagster'

description: 'dg cli local build command reference Dagster API | Comprehensive Python API documentation for Dagster, the data orchestration platform. Learn how to build, test, and maintain data pipelines with our detailed guides and examples.'

last_update:

date: '2025-12-10'

custom_edit_url: null

---

# dg CLI local build command reference

`dg` commands for scaffolding, checking, and listing Dagster entities, and running pipelines in a local Dagster instance.

Scaffolds a Dockerfile to build the given Dagster project or workspace.

>

NOTE: This command is maintained for backward compatibility.

Consider using dg plus deploy configure [serverless|hybrid] instead for a complete

deployment setup including CI/CD configuration.

component

Scaffold a custom Dagster component type.

>

This command must be run inside a Dagster project directory. The component type scaffold

will be placed in submodule \.lib.\.

defs

Commands for scaffolding Dagster code.

github-actions

Scaffold a GitHub Actions workflow for a Dagster project.

>

This command will create a GitHub Actions workflow in the .github/workflows directory.

NOTE: This command is maintained for backward compatibility.

Consider using dg plus deploy configure [serverless|hybrid] --git-provider github

instead for a complete deployment setup.

## dg dev

Start a local instance of Dagster.

If run inside a workspace directory, this command will launch all projects in the

workspace. If launched inside a project directory, it will launch only that project.

```shell

dg dev [OPTIONS]

```

Options:

--code-server-log-level \

Set the log level for code servers spun up by dagster services.

Default: `'warning'`

Options: critical | error | warning | info | debug

--log-level \

Set the log level for dagster services.

Default: `'info'`

Options: critical | error | warning | info | debug

--log-format \

Format of the logs for dagster services

Default: `'colored'`

Options: colored | json | rich

-p, --port \

Port to use for the Dagster webserver.

-h, --host \

Host to use for the Dagster webserver.

--live-data-poll-rate \

Rate at which the dagster UI polls for updated asset data (in milliseconds)

Default: `2000`

--check-yaml, --no-check-yaml

Whether to schema-check defs.yaml files for the project before starting the dev server.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

--use-active-venv

Use the active virtual environment as defined by $VIRTUAL_ENV for all projects instead of attempting to resolve individual project virtual environments.

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module or modules (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each module as a code location in the current python environment.

-f, --python-file \

Specify python file or files (flag can be used multiple times) where dagster definitions reside as top-level symbols/variables and load each file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

-w, --workspace \

Path to workspace file. Argument can be provided multiple times.

--empty-workspace

Allow an empty workspace

Environment variables:

DAGSTER_autoload_defs_module_name

>

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dg-dev-autoload-defs-module-name)

DAGSTER_MODULE_NAME

>

Provide a default for [`--module-name`](#cmdoption-dg-dev-m)

DAGSTER_PYTHON_FILE

>

Provide a default for [`--python-file`](#cmdoption-dg-dev-f)

DAGSTER_WORKING_DIRECTORY

>

Provide a default for [`--working-directory`](#cmdoption-dg-dev-d)

## dg check

Commands for checking the integrity of your Dagster code.

```shell

dg check [OPTIONS] COMMAND [ARGS]...

```

### defs

Loads and validates your Dagster definitions using a Dagster instance.

If run inside a deployment directory, this command will launch all code locations in the

deployment. If launched inside a code location directory, it will launch only that code

location.

When running, this command sets the environment variable DAGSTER_IS_DEFS_VALIDATION_CLI=1.

This environment variable can be used to control the behavior of your code in validation mode.
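One way user code might react to this variable is sketched below in Python. Only the variable name and the value `1` come from the text; the helper names, the `WAREHOUSE_URL` variable, and the stub URL are hypothetical.

```python
import os


def in_defs_validation() -> bool:
    """True when running under the definitions validation command."""
    return os.environ.get("DAGSTER_IS_DEFS_VALIDATION_CLI") == "1"


def warehouse_url() -> str:
    """Hypothetical example: avoid requiring real credentials during validation."""
    if in_defs_validation():
        return "stub://validation"  # placeholder value, never contacted
    return os.environ["WAREHOUSE_URL"]  # hypothetical variable name


print(in_defs_validation())
```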

This command returns an exit code 1 when errors are found, otherwise an exit code 0.

```shell

dg check defs [OPTIONS]

```
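Because the command exits with 0 only when no errors are found, a script can gate on its return code. The Python sketch below is one possible wrapper, not part of `dg`; the `run` parameter is a hypothetical hook that lets the check be exercised without invoking the real CLI, and any non-zero code is treated as a failure.

```python
import subprocess
from typing import Callable, Sequence


def defs_are_valid(run: Callable[[Sequence[str]], int] = subprocess.call) -> bool:
    """Run `dg check defs` and report whether it exited with code 0."""
    exit_code = run(["dg", "check", "defs"])
    return exit_code == 0


# Demonstration with stand-in runners instead of the real CLI.
print(defs_are_valid(run=lambda argv: 0))
print(defs_are_valid(run=lambda argv: 1))
```

Calling `defs_are_valid()` with no arguments would execute the real command in the current directory through `subprocess.call`.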

Options:

--log-level \

Set the log level for dagster services.

Default: `'warning'`

Options: critical | error | warning | info | debug

--log-format \

Format of the logs for dagster services

Default: `'colored'`

Options: colored | json | rich

--check-yaml, --no-check-yaml

Whether to schema-check defs.yaml files for the project before loading and checking all definitions.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

--use-active-venv

Use the active virtual environment as defined by $VIRTUAL_ENV for all projects instead of attempting to resolve individual project virtual environments.

Validate environment variables in requirements for all components in the given module.

--verbose

Enable verbose output for debugging.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

Arguments:

PATHS

Optional argument(s)

## dg list

Commands for listing Dagster entities.

```shell

dg list [OPTIONS] COMMAND [ARGS]...

```

### component-tree

```shell

dg list component-tree [OPTIONS]

```

Options:

--output-file \

Write to file instead of stdout. If not specified, will write to stdout.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

### components

List all available Dagster component types in the current Python environment.

```shell

dg list components [OPTIONS]

```

Options:

-p, --package \

Filter by package name.

--json

Output as JSON instead of a table.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

### defs

List registered Dagster definitions in the current project environment.

```shell

dg list defs [OPTIONS]

```

Options:

--json

Output as JSON instead of a table.

-p, --path \

Path to the definitions to list.

-a, --assets \

Asset selection to list.

-c, --columns \

Columns to display. Either a comma-separated list of column names, or multiple invocations of the flag. Available columns: key, group, deps, kinds, description, tags, cron, is_executable

--verbose

Enable verbose output for debugging.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.
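The `--columns` option above accepts a comma-separated list drawn from a fixed set of names. A small Python sketch of building such an invocation, with a hypothetical helper name and validation against the documented column names, might look like this:

```python
from typing import Sequence

# Column names listed in the --columns help text.
AVAILABLE_COLUMNS = (
    "key", "group", "deps", "kinds", "description", "tags", "cron", "is_executable",
)


def list_defs_argv(columns: Sequence[str] = (), as_json: bool = False) -> list[str]:
    """Build the argument list for `dg list defs`."""
    unknown = [c for c in columns if c not in AVAILABLE_COLUMNS]
    if unknown:
        raise ValueError(f"unknown columns: {unknown}")
    argv = ["dg", "list", "defs"]
    if columns:
        # Comma-separated form; repeating the flag is the documented alternative.
        argv += ["--columns", ",".join(columns)]
    if as_json:
        argv.append("--json")
    return argv


print(list_defs_argv(columns=["key", "group", "tags"]))
```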

### envs

List environment variables from the .env file of the current project.

```shell

dg list envs [OPTIONS]

```

Options:

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

### projects

List projects in the current workspace or emit the current project directory.

```shell

dg list projects [OPTIONS]

```

Options:

--verbose

Enable verbose output for debugging.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

### registry-modules

List dg plugins and their corresponding objects in the current Python environment.

```shell

dg list registry-modules [OPTIONS]

```

Options:

--json

Output as JSON instead of a table.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

JSON string of config to use for the launched run.

-c, --config \

Specify one or more run config files. These can also be file patterns. If more than one run config file is captured then those files are merged. Files listed first take precedence.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

-a, --attribute \

Attribute that is either a 1) repository or job or 2) a function that returns a repository or job

--package-name \

Specify Python package where repository or job function lives

--autoload-defs-module-name \

A module to import and recursively search through for definitions.

-m, --module-name \

Specify module where dagster definitions reside as top-level symbols/variables and load the module as a code location in the current python environment.

-f, --python-file \

Specify python file where dagster definitions reside as top-level symbols/variables and load the file as a code location in the current python environment.

-d, --working-directory \

Specify working directory to use when loading the repository or job

Environment variables:

DAGSTER_ATTRIBUTE

>

Provide a default for [`--attribute`](#cmdoption-dg-launch-a)

DAGSTER_PACKAGE_NAME

>

Provide a default for [`--package-name`](#cmdoption-dg-launch-package-name)

DAGSTER_autoload_defs_module_name

>

Provide a default for [`--autoload-defs-module-name`](#cmdoption-dg-launch-autoload-defs-module-name)

DAGSTER_MODULE_NAME

>

Provide a default for [`--module-name`](#cmdoption-dg-launch-m)

DAGSTER_PYTHON_FILE

>

Provide a default for [`--python-file`](#cmdoption-dg-launch-f)

DAGSTER_WORKING_DIRECTORY

>

Provide a default for [`--working-directory`](#cmdoption-dg-launch-d)

## dg scaffold defs example

Note: Before scaffolding definitions with `dg`, you must [create a project](https://docs.dagster.io/guides/build/projects/creating-a-new-project) with the [create-dagster CLI](https://docs.dagster.io/api/clis/create-dagster) and activate its virtual environment.

You can use the `dg scaffold defs` command to scaffold a new asset underneath the `defs` folder. In this example, we scaffold an asset in a file named `my_asset.py` and write it to the `defs/assets` directory:

```bash

dg scaffold defs dagster.asset assets/my_asset.py

Creating a component at /.../my-project/src/my_project/defs/assets/my_asset.py.

```

Once the asset has been scaffolded, we can see that a new file has been added to `defs/assets`, and view its contents:

```bash

tree
.
├── pyproject.toml
├── src
│   └── my_project
│       ├── __init__.py
│       └── defs
│           ├── __init__.py
│           └── assets
│               └── my_asset.py
├── tests
│   └── __init__.py
└── uv.lock

```

```python

# Contents of src/my_project/defs/assets/my_asset.py

import dagster as dg

@dg.asset

def my_asset(context: dg.AssetExecutionContext) -> dg.MaterializeResult: ...

```

Note: You can run `dg scaffold defs` from within any directory in your project and the resulting files will always be created in the `/src//defs/` folder.

In the above example, the scaffolded asset contains only a placeholder definition (its body is `...`). You can replace this definition with working code:

```python

import dagster as dg


@dg.asset(group_name="my_group")
def my_asset(context: dg.AssetExecutionContext) -> None:
    """Asset that greets you."""
    context.log.info("hi!")

```

To confirm that the new asset now appears in the list of definitions, run `dg list defs`:

```bash

dg list defs

┏━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ Section ┃ Definitions                                                     ┃
┡━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┩
│ Assets  │ ┏━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━┳━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓ │
│         │ ┃ Key      ┃ Group    ┃ Deps ┃ Kinds ┃ Description            ┃ │
│         │ ┡━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━╇━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩ │
│         │ │ my_asset │ my_group │      │       │ Asset that greets you. │ │
│         │ └──────────┴──────────┴──────┴───────┴────────────────────────┘ │
└─────────┴─────────────────────────────────────────────────────────────────┘

```

---

---

title: 'dg plus reference'

title_meta: 'dg plus reference API Documentation - Build Better Data Pipelines | Python Reference Documentation for Dagster'

description: 'dg plus reference Dagster API | Comprehensive Python API documentation for Dagster, the data orchestration platform. Learn how to build, test, and maintain data pipelines with our detailed guides and examples.'

last_update:

date: '2025-12-10'

custom_edit_url: null

---

# dg plus reference

## dg plus

Commands for interacting with Dagster Plus.

```shell

dg plus [OPTIONS] COMMAND [ARGS]...

```

### create

Commands for creating configuration in Dagster Plus.

```shell

dg plus create [OPTIONS] COMMAND [ARGS]...

```

#### ci-api-token

Create a Dagster Plus API token for CI.

```shell

dg plus create ci-api-token [OPTIONS]

```

Options:

--description \

Description for the token

--verbose

Enable verbose output for debugging.

#### env

Create or update an environment variable in Dagster Plus.

```shell

dg plus create env [OPTIONS] ENV_NAME [ENV_VALUE]

```

Options:

--from-local-env

Pull the environment variable value from your shell environment or project .env file.

--scope \

The deployment scope to set the environment variable in. Defaults to all scopes.

Options: full | branch | local

--global

Whether to set the environment variable at the deployment level, for all locations.

-y, --yes

Skip the confirmation prompt when the environment variable already exists.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

Arguments:

ENV_NAME

Required argument

ENV_VALUE

Optional argument
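As an illustration of how these options combine, the sketch below sets a variable for branch deployments only and takes its value from the local shell or `.env` file. It assumes that `ENV_VALUE` can be omitted when `--from-local-env` is given. `MY_API_KEY` is a hypothetical variable name, and `RUN_DG` is a guard introduced only for this example so that nothing is sent to Dagster Plus unless you opt in.

```shell
# MY_API_KEY is a hypothetical name; replace it with your own variable.
ENV_NAME="MY_API_KEY"

# --from-local-env reads the value locally, so ENV_VALUE is left out (assumption).
# --scope branch limits the variable to branch deployments.
# --yes skips the confirmation prompt if the variable already exists.
CMD="dg plus create env --from-local-env --scope branch --yes $ENV_NAME"

# Print the command so it can be reviewed first.
echo "$CMD"

# RUN_DG is a guard used only in this sketch; set RUN_DG=1 to run the command.
if [ "${RUN_DG:-0}" = "1" ]; then
  $CMD
fi
```

Printing the command before running it is a convenience for review; in practice you would likely type the `dg plus create env` line directly.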

### deploy

Deploy a project or workspace to Dagster Plus. This command handles all state management for the deploy session, building and pushing a new code artifact for each project.

To run a full end-to-end deploy, run `dg plus deploy`. This starts a new session, builds and pushes the image for the project or workspace, and tells Dagster+ to deploy the newly built code.

Each stage of the deploy is also available as its own subcommand for additional customization.

```shell

dg plus deploy [OPTIONS] COMMAND [ARGS]...

```

Options:

--deployment \

Name of the Dagster+ deployment to which to deploy (or use as the base deployment if deploying to a branch deployment). If not set, defaults to the value set by dg plus login.

Default: `'deployment'`

--organization \

Dagster+ organization to which to deploy. If not set, defaults to the value set by dg plus login.

Default: `'organization'`

--python-version \

Python version used to deploy the project. If not set, defaults to the calling process’s Python minor version.

Options: 3.9 | 3.10 | 3.11 | 3.12

--deployment-type \

Whether to deploy to a full deployment or a branch deployment. If unset, will attempt to infer from the current git branch.

Options: full | branch

--agent-type \

Whether this is a Hybrid or Serverless code location.

Options: serverless | hybrid

-y, --yes

Skip confirmation prompts.

--git-url \

--commit-hash \

--location-name \

Name of the code location to set the build output for. Defaults to the current project’s code location, or every project’s code location when run in a workspace.

--status-url \

--snapshot-base-condition \

Options: on-create | on-update

--use-editable-dagster

Install all Dagster package dependencies from a local Dagster clone. The location of the local Dagster clone will be read from the DAGSTER_GIT_REPO_DIR environment variable.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.

Environment variables:

DAGSTER_CLOUD_DEPLOYMENT

Provide a default for [`--deployment`](#cmdoption-dg-plus-deploy-deployment)

DAGSTER_CLOUD_ORGANIZATION

Provide a default for [`--organization`](#cmdoption-dg-plus-deploy-organization)
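A minimal sketch of a full end-to-end deploy might look like the following. It uses the two environment variables above instead of `--organization` and `--deployment`. The names `my-org` and `prod` are hypothetical, and `RUN_DG` is a guard introduced only for this example so the deploy runs only when requested.

```shell
# Hypothetical organization and deployment names; replace with your own.
export DAGSTER_CLOUD_ORGANIZATION="my-org"
export DAGSTER_CLOUD_DEPLOYMENT="prod"

# Deploy to a full (non-branch) deployment on a serverless agent,
# pinning the Python version and skipping confirmation prompts.
CMD="dg plus deploy --deployment-type full --agent-type serverless --python-version 3.12 --yes"

# Print the command so it can be reviewed first.
echo "$CMD"

# RUN_DG is a guard used only in this sketch; set RUN_DG=1 to run the deploy.
if [ "${RUN_DG:-0}" = "1" ]; then
  $CMD
fi
```

Setting the defaults through environment variables keeps the command line the same across CI jobs that target different deployments.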

#### build-and-push

Builds a Docker image to be deployed and pushes it to the registry that was configured when the deploy session was started.

```shell

dg plus deploy build-and-push [OPTIONS]

```

Options:

--agent-type \

Whether this is a Hybrid or Serverless code location.

Options: serverless | hybrid

--python-version \

Python version used to deploy the project. If not set, defaults to the calling process’s Python minor version.

Options: 3.9 | 3.10 | 3.11 | 3.12

--location-name \

Name of the code location to set the build output for. Defaults to the current project’s code location, or every project’s code location when run in a workspace.

--use-editable-dagster

Install all Dagster package dependencies from a local Dagster clone. The location of the local Dagster clone will be read from the DAGSTER_GIT_REPO_DIR environment variable.

--target-path \

Specify a directory to use to load the context for this command. This will typically be a folder with a dg.toml or pyproject.toml file in it.

--verbose

Enable verbose output for debugging.
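For example, the build stage could be run on its own for a single code location, as sketched below. This assumes a deploy session has already been started, since the registry comes from that session. `my-location` is a hypothetical code location name, and `RUN_DG` is a guard introduced only for this example.

```shell
# my-location is a hypothetical code location name.
LOCATION="my-location"

# Build and push the image for one Hybrid code location with Python 3.11.
CMD="dg plus deploy build-and-push --agent-type hybrid --python-version 3.11 --location-name $LOCATION"

# Print the command so it can be reviewed first.
echo "$CMD"

# RUN_DG is a guard used only in this sketch; set RUN_DG=1 to run the build.
if [ "${RUN_DG:-0}" = "1" ]; then
  $CMD
fi
```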

#### configure

Scaffold deployment configuration files for Dagster Plus.

If no subcommand is specified, the command attempts to auto-detect the agent type from your Dagster Plus deployment. If detection fails, you are prompted to choose between serverless and hybrid.

```shell

dg plus deploy configure [OPTIONS] COMMAND [ARGS]...

```

Options:

--git-provider \

Git provider for CI/CD scaffolding

Options: github | gitlab

--verbose

Enable verbose output for debugging.

##### hybrid

Scaffold deployment configuration for Dagster Plus Hybrid.

This creates:

- Dockerfile and build.yaml for containerization

- container_context.yaml with platform-specific config (k8s/ecs/docker)

- Required files for CI/CD based on your Git provider (GitHub Actions or GitLab CI)

```shell

dg plus deploy configure hybrid [OPTIONS]

```

Options:

--git-provider \

Git provider for CI/CD scaffolding

Options: github | gitlab

Container registry URL for Docker images (e.g., 123456789012.dkr.ecr.us-east-1.amazonaws.com/my-repo)

--python-version \

Python version used to deploy the project

Options: 3.9 | 3.10 | 3.11 | 3.12 | 3.13

--organization \

Dagster Plus organization name

--deployment \

Deployment name

--git-root \

Path to the git repository root

-y, --yes

Skip confirmation prompts

--use-editable-dagster

Install all Dagster package dependencies from a local Dagster clone. The location of the local Dagster clone will be read from the DAGSTER_GIT_REPO_DIR environment variable.

--verbose

Enable verbose output for debugging.
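As a rough sketch, scaffolding Hybrid configuration for a GitHub-hosted project could look like this. The organization `my-org` and deployment `prod` are hypothetical, and `RUN_DG` is a guard introduced only for this example so that no files are written unless you opt in.

```shell
# Hypothetical organization and deployment names; replace with your own.
ORG="my-org"
DEPLOYMENT="prod"

# Scaffold Hybrid deployment files with GitHub Actions workflows,
# targeting Python 3.12 and skipping confirmation prompts.
CMD="dg plus deploy configure hybrid --git-provider github --python-version 3.12 --organization $ORG --deployment $DEPLOYMENT --yes"

# Print the command so it can be reviewed first.
echo "$CMD"

# RUN_DG is a guard used only in this sketch; set RUN_DG=1 to write the files.
if [ "${RUN_DG:-0}" = "1" ]; then
  $CMD
fi
```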

##### serverless

Scaffold deployment configuration for Dagster Plus Serverless.

This creates:

- Required files for CI/CD based on your Git provider (GitHub Actions or GitLab CI)

- Dockerfile and build.yaml for containerization (if `--no-pex-deploy` is used)

```shell

dg plus deploy configure serverless [OPTIONS]

```

Options:

--git-provider \

Git provider for CI/CD scaffolding

Options: github | gitlab

--python-version \

Python version used to deploy the project

Options: 3.9 | 3.10 | 3.11 | 3.12 | 3.13

--organization \

Dagster Plus organization name

--deployment \

Deployment name

--git-root \

Path to the git repository root

--pex-deploy, --no-pex-deploy

Enable PEX-based fast deploys (default: True). If disabled, Docker builds will be used.

-y, --yes